Anomaly Detection is the silent guardian of your data, constantly at work behind the scenes.

Are hidden issues silently draining your business’s revenue, jeopardizing your security, or skewing your crucial data insights?

In today’s hyper-connected, data-rich world, unexpected deviations can appear anywhere, from a sudden dip in website conversions to a subtle yet dangerous shift in network traffic.

These “anomalies,” often invisible to the naked eye, hold the key to uncovering both significant threats and untapped opportunities.

Anomaly detection is a powerful discipline at the intersection of data science, AI, and machine learning. It focuses on identifying the rare events, observations, or data points that diverge significantly from the expected pattern.

More than just a buzzword, anomaly detection is becoming an indispensable tool for businesses aiming to stay competitive, secure, and data-driven.

In this comprehensive guide, we’ll peel back the layers of anomaly detection.

You’ll learn exactly what it is, why it’s not just a technicality but a strategic imperative for your business, its various types, how it works, and its impactful real-world applications.

We’ll also address common challenges and best practices for implementation, ultimately showing you how Tatvic empowers businesses like yours to harness this technology for smarter, safer operations.

What is an Anomaly?

An anomaly is any data point, event, or observation that significantly deviates from the normal or expected pattern within a dataset.

It’s an outlier or an irregularity that doesn’t conform to the typical behavior or trend.

These unusual occurrences can signal anything from a data error to a critical system issue, a fraudulent activity, or an unexpected but important business trend.

What Exactly is Anomaly Detection?

At its core, Anomaly Detection (also known as Outlier Detection) is the process of identifying data points or patterns that do not conform to an expected behavior.

Think of it as finding the “needle in the haystack”: the one data point, or sequence of points, that differs significantly from the vast majority of the data.

While “normal” data often follows predictable trends and distributions, anomalies stick out because they violate these norms.

These deviations can be caused by various factors, including:

- Errors: Data entry mistakes, sensor malfunctions.

- Rare Events: A sudden, legitimate spike in sales due to a viral campaign.

- Malicious Activity: Fraudulent transactions, cyber-attacks.

- System Failures: Server crashes, network outages.

Unlike simple thresholding, which flags values above or below a fixed limit, anomaly detection often involves sophisticated algorithms that learn the “normal” baseline behavior from historical data.

This allows it to identify subtle, complex, or evolving abnormalities that a static rule might miss. It’s about understanding the context and the relationships within the data, rather than just isolated values.

The ‘Why’: Why Anomaly Detection is Critical for Modern Businesses

In an era where data is king, overlooking anomalies is like leaving money on the table, or leaving the door open to threats.

Anomaly detection is not just a ‘nice-to-have’ technical capability; it’s a strategic ‘must-have’ that delivers tangible business benefits across various functions.

Importance Of Anomaly Detection In Various Business Functions:

1. Safeguarding Revenue & Preventing Fraud

Fraudulent activities, whether in financial transactions, advertising clicks, or e-commerce purchases, can lead to substantial revenue loss. Anomaly detection systems continuously monitor patterns, flagging suspicious transactions, unusual login attempts, or abnormal clickstream data that deviate from typical user behavior, allowing for rapid intervention and prevention.

2. Enhancing Operational Efficiency & Predictive Maintenance

For businesses with physical assets or complex IT infrastructures, anomalies can signal impending equipment failure or operational bottlenecks. By detecting unusual sensor readings (e.g., temperature spikes, abnormal vibrations) or unexpected latency in IT systems, companies can perform predictive maintenance, minimize downtime, and optimize operational costs, moving from reactive fixes to proactive solutions.

3. Optimizing Digital Marketing & User Experience

For Tatvic’s core audience, this is paramount.

Anomaly detection can transform digital marketing efforts:

- Website Traffic: Identify sudden, inexplicable drops or spikes in traffic that could indicate bot activity, tracking errors, or a trending issue.

- Conversion Rates: Pinpoint abnormal shifts in conversion rates for specific campaigns, channels, or user segments, allowing for quick A/B test adjustments or problem resolution.

- Ad Spend & Performance: Flag unusual ad spend patterns, click fraud, or campaigns underperforming unexpectedly, ensuring budget efficiency.

- Customer Journey: Spot deviations in user behavior sequences that might indicate frustration, confusion, or a new successful path.

4. Improving Data Quality & Accuracy

Garbage in, garbage out. Anomalies within your datasets can skew analysis, leading to incorrect conclusions and flawed strategies. Anomaly detection helps cleanse data by identifying erroneous entries, sensor glitches, or data collection issues, ensuring that your analytics and machine learning models are built on a solid foundation of reliable data.

5. Strengthening Cybersecurity & IT Operations

In the face of ever-evolving cyber threats, anomaly detection is a first line of defense. It helps identify:

- Unusual Network Activity: Malicious intrusions, data exfiltration, or unauthorized access attempts.

- System Performance Degradation: Subtle shifts indicating a server overload or an application issue before it escalates.

- Insider Threats: Employee behaviors that deviate from their normal work patterns.

6. Gaining Competitive Advantage

By proactively identifying and addressing issues, optimizing performance, and preventing losses, businesses leveraging anomaly detection gain a significant edge. They can react faster to market changes, maintain higher levels of service quality, and make data-driven decisions with greater confidence.

What Are The Types of Anomalies? Understanding the Deviations

Not all anomalies are created equal.

Understanding the different types helps in selecting the most appropriate detection methods and interpreting their significance.

Let’s Explore Different Types Of Anomalies:

1. Point Anomalies (Outliers)

These are single data instances that deviate significantly from the rest of the dataset.

- Example: A credit card transaction of $10,000 in an account where the average transaction is $50.

- Example in Marketing: A single blog post suddenly receiving 10x its usual traffic for no apparent reason, or a single day with 0 conversions on an otherwise active e-commerce site.

2. Contextual Anomalies

A data instance is anomalous only in a specific context. It might be normal in a different context.

- Example: An outdoor temperature reading of 30°C is normal in summer but highly anomalous in winter.

- Example in Marketing: A spike in website traffic at 2 AM is normal for a global product launch, but anomalous for a local business on a typical weekday.
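To make the idea concrete, here is a minimal Python sketch, assuming hypothetical hourly session counts for a local business. It learns a separate baseline (mean and standard deviation) for each hour of the day and flags a value only when it is unusual for its own hour. The function names, the sample data, and the 3-standard-deviation cut-off are illustrative assumptions.

```python
from collections import defaultdict
from statistics import mean, stdev

# Illustrative (hypothetical) history: (hour_of_day, sessions) pairs.
history = [
    (2, 10), (2, 12), (2, 11), (2, 9), (2, 13),
    (14, 500), (14, 520), (14, 480), (14, 510), (14, 490),
]

def fit_hourly_baseline(records):
    """Learn a (mean, standard deviation) baseline for each hour, i.e. each context."""
    by_hour = defaultdict(list)
    for hour, value in records:
        by_hour[hour].append(value)
    return {hour: (mean(vals), stdev(vals)) for hour, vals in by_hour.items()}

def is_contextual_anomaly(hour, value, baseline, k=3.0):
    """Flag a value lying more than k standard deviations from its own hour's mean."""
    mu, sigma = baseline[hour]
    return abs(value - mu) > k * sigma

baseline = fit_hourly_baseline(history)
print(is_contextual_anomaly(2, 480, baseline))   # True: 480 sessions is extreme at 2 AM
print(is_contextual_anomaly(14, 480, baseline))  # False: the same value is normal at 2 PM
```

The same value of 480 is normal in one context and anomalous in another, which a single global threshold cannot express.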

3. Collective Anomalies

A collection of related data instances that collectively behave anomalously, even if individual data points within the collection are not anomalous.

- Example: A gradual, sustained decrease in network bandwidth over several hours, where each individual reading might seem unremarkable but the readings collectively indicate a problem.

- Example in Marketing: A consistent, but slight, drop in session duration across multiple pages over a week, signaling potential user frustration that isn’t evident from individual page metrics.
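A minimal Python sketch of this idea, assuming hypothetical daily average session durations in seconds and roughly independent day-to-day noise: no single recent day falls outside the usual 3-standard-deviation band, but the mean of the whole week does, because the standard error of a mean over n points shrinks as σ/√n. The data and function names are illustrative.

```python
from math import sqrt
from statistics import mean, stdev

# Illustrative (hypothetical) reference period: average session duration (s) per day.
reference = [120, 118, 122, 119, 121, 120, 118, 122, 121, 119]
recent_week = [117, 116, 117, 118, 116, 117, 117]

mu, sigma = mean(reference), stdev(reference)

def point_flags(values, mu, sigma, k=3.0):
    """Per-point check: is each value more than k standard deviations from mu?"""
    return [abs(v - mu) > k * sigma for v in values]

def window_is_anomalous(window, mu, sigma, k=3.0):
    """Collective check: is the window's mean too far from mu for its size?

    Assumes roughly independent points, so the window mean has
    standard error sigma / sqrt(n).
    """
    standard_error = sigma / sqrt(len(window))
    return abs(mean(window) - mu) > k * standard_error

print(any(point_flags(recent_week, mu, sigma)))     # False: no single day stands out
print(window_is_anomalous(recent_week, mu, sigma))  # True: the sustained dip does
```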

How Anomaly Detection Works: Methods & Techniques

The core idea behind anomaly detection is to build a model of “normal” behavior and then identify instances that deviate significantly from this model.

The methodologies employed can range from simple statistical rules to complex machine learning algorithms.

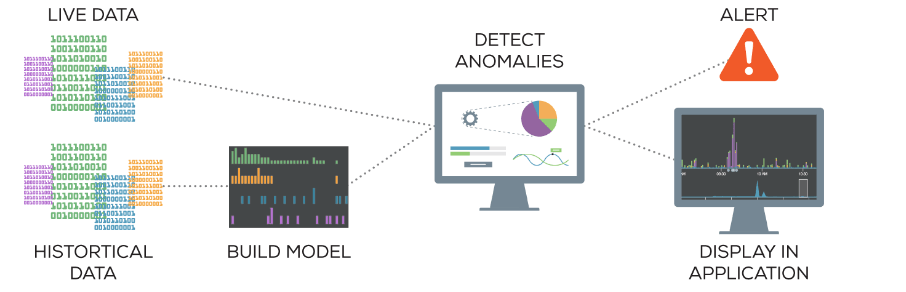

1. Overview of the Process:

- Data Collection: Gathering relevant data streams (e.g., logs, sensor data, transaction records, website analytics).

- Data Preprocessing: Cleaning, normalizing, and transforming data to suit the chosen method.

- Model Training: Teaching the algorithm what “normal” looks like based on historical data.

- Anomaly Scoring/Classification: Assigning an anomaly score to new data points or classifying them as normal/anomalous.

- Alerting & Action: Notifying relevant personnel or triggering automated responses when anomalies are detected.
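The five steps can be sketched end to end in a few lines of Python. This is a minimal illustration, not a production design: the sample readings are made up, "normal" is modelled with the median and the median absolute deviation (MAD), and the 3.5 cut-off is a commonly used rule of thumb for this robust score. All of these are assumptions.

```python
from statistics import median

def preprocess(raw):
    """Data preprocessing: drop missing readings and coerce the rest to float."""
    return [float(x) for x in raw if x is not None]

def train(history):
    """Model training: learn 'normal' as the median and the MAD of the history."""
    center = median(history)
    mad = median([abs(x - center) for x in history])
    return center, mad

def score(x, model):
    """Anomaly scoring: robust z-score (1.4826 * MAD estimates sigma for normal data)."""
    center, mad = model
    scale = 1.4826 * mad
    if scale == 0:
        return 0.0 if x == center else float("inf")
    return abs(x - center) / scale

def alert(points, model, threshold=3.5):
    """Alerting: return a message for every point whose score exceeds the threshold."""
    return [
        f"ALERT: value {x} has anomaly score {score(x, model):.1f}"
        for x in points
        if score(x, model) > threshold
    ]

# Data collection (hypothetical readings; None marks a missing value).
raw_history = [100, 102, None, 98, 101, 99, 103, 97, 100]
model = train(preprocess(raw_history))
alerts = alert(preprocess([101, 140]), model)
print(alerts)
```

The median and MAD are used here instead of the mean and standard deviation so that a few bad readings in the history do not distort the learned baseline.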

2. Statistical Methods

These are often the simplest forms, suitable for data with clear statistical properties.

- Z-score/Standard Deviation: Identifies data points that lie more than a chosen number of standard deviations (commonly 3) from the mean.

- Interquartile Range (IQR): Flags values outside a range defined by the quartiles, commonly below Q1 - 1.5 × IQR or above Q3 + 1.5 × IQR. Because quartiles are barely affected by extreme values, this method is robust to outliers.

- Gaussian Models: Assumes data follows a normal distribution and flags points with low probability under this distribution.
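The three statistical checks above can be sketched with Python's standard `statistics` module. This is a minimal illustration using made-up transaction amounts, echoing the $50-average example earlier. The cut-offs (3 standard deviations, 1.5 × IQR, and a 0.1% tail probability) are conventional defaults assumed here, not prescribed values.

```python
from statistics import NormalDist, mean, quantiles, stdev

# Illustrative (hypothetical) transaction amounts (USD) treated as normal behavior.
history = [48, 52, 50, 47, 55, 49, 51, 53, 46, 50]

mu, sigma = mean(history), stdev(history)
q1, _, q3 = quantiles(history, n=4)  # first and third quartiles of the history
iqr = q3 - q1
gaussian = NormalDist(mu, sigma)     # Gaussian model fitted to the history

def zscore_flag(x, k=3.0):
    """Z-score: more than k standard deviations from the mean."""
    return abs(x - mu) / sigma > k

def iqr_flag(x, factor=1.5):
    """IQR: outside the fences [Q1 - factor*IQR, Q3 + factor*IQR]."""
    return x < q1 - factor * iqr or x > q3 + factor * iqr

def gaussian_flag(x, alpha=0.001):
    """Gaussian model: the two-sided tail probability of x is below alpha."""
    tail = 2 * (1 - gaussian.cdf(mu + abs(x - mu)))
    return tail < alpha

for amount in (52, 10_000):
    print(amount, zscore_flag(amount), iqr_flag(amount), gaussian_flag(amount))
```

Fitting on history that excludes the point being scored matters. In a sample of n points, no z-score can exceed (n - 1)/√n (about 2.85 for ten points), so in a small batch an extreme value inflates the standard deviation enough to hide itself. The quartiles behind the IQR fences barely move.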

3. Machine Learning Approaches

These are more sophisticated and can learn complex, non-linear relationships, making them suitable for high-dimensional or complex data.

- Clustering-based: Algorithms like K-means or DBSCAN group similar data points together. Anomalies are points that lie far from every cluster center, points that DBSCAN labels as noise, or points that form very small, isolated clusters.

- Classification-based: Training a classifier (e.g., One-Class SVM) on “normal” data. Any new data point not classified as normal is an anomaly.

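- Isolation- and proximity-based: Isolation Forest isolates anomalies through random partitioning, since unusual points need fewer splits to separate from the rest. Local Outlier Factor (LOF) flags points whose local density is much lower than that of their neighbors.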

- Deep Learning: Neural networks like Autoencoders can learn compressed representations of normal data. Data points that reconstruct poorly (high reconstruction error) are considered anomalous.
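To illustrate the proximity idea with nothing beyond the standard library, here is a minimal k-nearest-neighbor distance score in Python. Each point's score is its average distance to its k closest neighbors, so isolated points score high. This is a simplified stand-in for LOF, not an implementation of it. The two-feature session data (pages per session, minutes on site) is made up.

```python
from math import dist

def knn_scores(points, k=3):
    """Score each point by its mean Euclidean distance to its k nearest neighbors."""
    scores = []
    for i, p in enumerate(points):
        distances = sorted(dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(sum(distances[:k]) / k)
    return scores

# Illustrative (hypothetical) sessions: (pages per session, minutes on site).
sessions = [
    (3.0, 4.0), (3.2, 4.1), (2.9, 3.8), (3.1, 4.2), (3.0, 3.9), (2.8, 4.0),
    (12.0, 0.5),  # e.g. a bot crawling many pages very quickly
]
scores = knn_scores(sessions)
most_anomalous = max(range(len(sessions)), key=scores.__getitem__)
print(sessions[most_anomalous], round(scores[most_anomalous], 2))
```

This brute-force version compares every pair of points, so it only suits small datasets. In practice, libraries such as scikit-learn provide `LocalOutlierFactor` (in `sklearn.neighbors`), which normalizes these distances by local density, and `IsolationForest` (in `sklearn.ensemble`), which isolates points with random splits.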

4. Time Series Specific Methods

When dealing with sequential data (like website traffic or server logs), specialized methods account for temporal dependencies.

- ARIMA (Autoregressive Integrated Moving Average): Models past values to predict future ones, flagging significant deviations from predictions.

- Prophet (Facebook): Designed for forecasting time series data with trends, seasonality, and holidays, useful for detecting anomalies against a predicted baseline.
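ARIMA and Prophet need dedicated libraries, so the sketch below swaps in a much simpler forecaster to show the idea they share: predict each value, then flag large forecast errors. It uses a seasonal-naive forecast (each day is predicted to equal the same day one week earlier) on made-up daily session counts, and judges each error against the spread of past errors. The weekly season, the 3-standard-deviation cut-off, and the function names are assumptions for illustration.

```python
from statistics import mean, stdev

def fit_error_model(history, season):
    """Mean and spread of seasonal-naive forecast errors on the history."""
    errors = [history[t] - history[t - season] for t in range(season, len(history))]
    return mean(errors), stdev(errors)

def flag_new_values(history, new_values, season=7, k=3.0):
    """Forecast each new value as the value one season earlier; flag large errors."""
    err_mean, err_sd = fit_error_model(history, season)
    series = list(history)
    flags = []
    for value in new_values:
        forecast = series[-season]
        flags.append(abs((value - forecast) - err_mean) > k * err_sd)
        series.append(value)
    return flags

# Three weeks of hypothetical daily sessions (Mon..Sun), with a weekend dip.
history = [
    1000, 1010, 990, 1005, 995, 600, 610,
    1005, 1000, 995, 1010, 990, 605, 600,
    995, 1005, 1000, 1000, 1000, 610, 605,
]
next_week = [1000, 400, 1005, 1000, 995, 605, 600]
print(flag_new_values(history, next_week))  # [False, True, False, False, False, False, False]
```

The weekend values of around 600 are not flagged because they match the previous weekend, whereas a fixed "below 800" rule would flag every Saturday and Sunday. One caveat of this naive version: the anomalous Tuesday would become next Tuesday's forecast. Models such as ARIMA and Prophet estimate trend and seasonality from the whole history, so a single bad day has less influence.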

Anomaly Detection Method (Practical Approach)

Machine learning is useful for learning the characteristics of a system from observed data.

Common anomaly detection methods for time series data learn the parameters of the data distribution in windows over time and identify anomalies as data points that have a low probability of being generated from that distribution.
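A minimal Python sketch of this windowed approach, under stated assumptions: a Gaussian is re-fitted to the last `window` points before each new observation, and the observation is flagged when its two-sided tail probability under that Gaussian falls below `alpha`. The steadily rising series with one injected spike, the window size, and `alpha` are all illustrative.

```python
from statistics import NormalDist, mean, stdev

def windowed_gaussian_anomalies(series, window=10, alpha=0.001):
    """Return indices whose value is improbable under a Gaussian fitted
    to the preceding `window` points."""
    anomalies = []
    for t in range(window, len(series)):
        past = series[t - window:t]
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:  # flat window: any change at all is unexpected
            if series[t] != mu:
                anomalies.append(t)
            continue
        model = NormalDist(mu, sigma)
        tail = 2 * (1 - model.cdf(mu + abs(series[t] - mu)))
        if tail < alpha:
            anomalies.append(t)
    return anomalies

# Hypothetical metric that grows steadily, with one spike at index 20.
series = list(range(100, 130))
series[20] = 200
print(windowed_gaussian_anomalies(series))  # [20]
```

Because the window slides, the steady growth is absorbed into the baseline and never raises an alarm, while the sudden spike does. The spike then sits inside the next ten windows and widens them, which is why some systems exclude flagged points from the baseline.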

Another class of methods includes sequential hypothesis tests such as cumulative sum (CUSUM) charts and the sequential probability ratio test (SPRT), which can identify certain types of changes in the distribution of real-time data. All of these methods rely on predefined thresholds to raise alerts when the distribution of a time series changes.
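A minimal tabular CUSUM sketch in Python, assuming a known in-control mean `target` and standard deviation `sigma` (for example, estimated from a stable historical period). It accumulates deviations beyond a slack of `k` standard deviations in each direction and raises an alarm when either sum exceeds `h` standard deviations. The values k = 0.5 and h = 4 are commonly cited textbook choices, used here as assumptions along with the made-up hourly conversion counts.

```python
def cusum_first_alarm(values, target, sigma, k=0.5, h=4.0):
    """Tabular CUSUM: return (index, direction) of the first alarm, or None.

    k (slack) and h (decision interval) are expressed in units of sigma.
    """
    slack, limit = k * sigma, h * sigma
    upper = lower = 0.0
    for i, x in enumerate(values):
        upper = max(0.0, upper + (x - target - slack))  # evidence of an upward shift
        lower = max(0.0, lower + (target - slack - x))  # evidence of a downward shift
        if upper > limit:
            return i, "up"
        if lower > limit:
            return i, "down"
    return None

# Hypothetical hourly conversions: stable around 50, then a small sustained drop.
conversions = [50, 51, 49, 50, 52, 48, 50, 51, 49, 50, 47, 47, 46, 48, 47, 47]
print(cusum_first_alarm(conversions, target=50, sigma=2))  # (14, 'down')
```

No single value here is more than 2 standard deviations from the target, yet the accumulated evidence triggers an alarm after five hours at the lower level.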

At their core, all of these methods test whether the sequence of values in a time series is consistent with having been generated by an i.i.d. (independent and identically distributed) process.

This approach becomes impractical for complex, extremely large-scale environments and infrastructures. As complexity grows, fixed thresholds cannot adapt as the volume of data changes over time.

At Tatvic, we define anomaly detection as the identification of data points which deviate from normal and expected behaviour.

In our system, for example, we use historical data to construct a quantitative representation of the data distribution of each monitored metric.

Real-time data points are compared against these quantitative representations and are assigned a score.

A decision on whether a real-time data point is an anomaly is then made against a threshold derived from recent observations of the data. One of the key advantages of this approach is that the thresholds are not static but evolve with the data.
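The idea of a threshold that evolves with the data can be sketched as follows. This is a hypothetical illustration, not Tatvic's implementation. It assumes each incoming data point has already been given an anomaly score (for example, its distance from the historical representation of the metric), and it sets the threshold at the mean plus `k` standard deviations of the most recent normal scores, so the threshold moves as the data moves. The class name, parameters, and scores are made up.

```python
from collections import deque
from statistics import mean, stdev

class AdaptiveThreshold:
    """Hypothetical sketch: the threshold is recomputed from a sliding window
    of recent scores, so it is not static but evolves with the data."""

    def __init__(self, window=50, k=3.0, min_history=5):
        self.recent = deque(maxlen=window)  # most recent non-anomalous scores
        self.k = k
        self.min_history = min_history

    def threshold(self):
        return mean(self.recent) + self.k * stdev(self.recent)

    def is_anomaly(self, score):
        if len(self.recent) < self.min_history:
            flagged = False  # not enough history yet to judge
        else:
            flagged = score > self.threshold()
        if not flagged:
            self.recent.append(score)  # keep flagged points out of the baseline
        return flagged

detector = AdaptiveThreshold()
scores = [1.0, 1.2, 0.9, 1.1, 1.0, 1.3, 0.8, 1.1, 6.0, 1.0]
print([detector.is_anomaly(s) for s in scores])
```

Excluding flagged scores keeps one spike from inflating the threshold. The trade-off is that a genuine, lasting change in level would keep being flagged until the window is reset or a person confirms the new level, which is one reason human feedback remains useful.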

Let me simplify further with an example of a website traffic data pattern. Tatvic’s solution starts with collecting the website’s behavioural data.

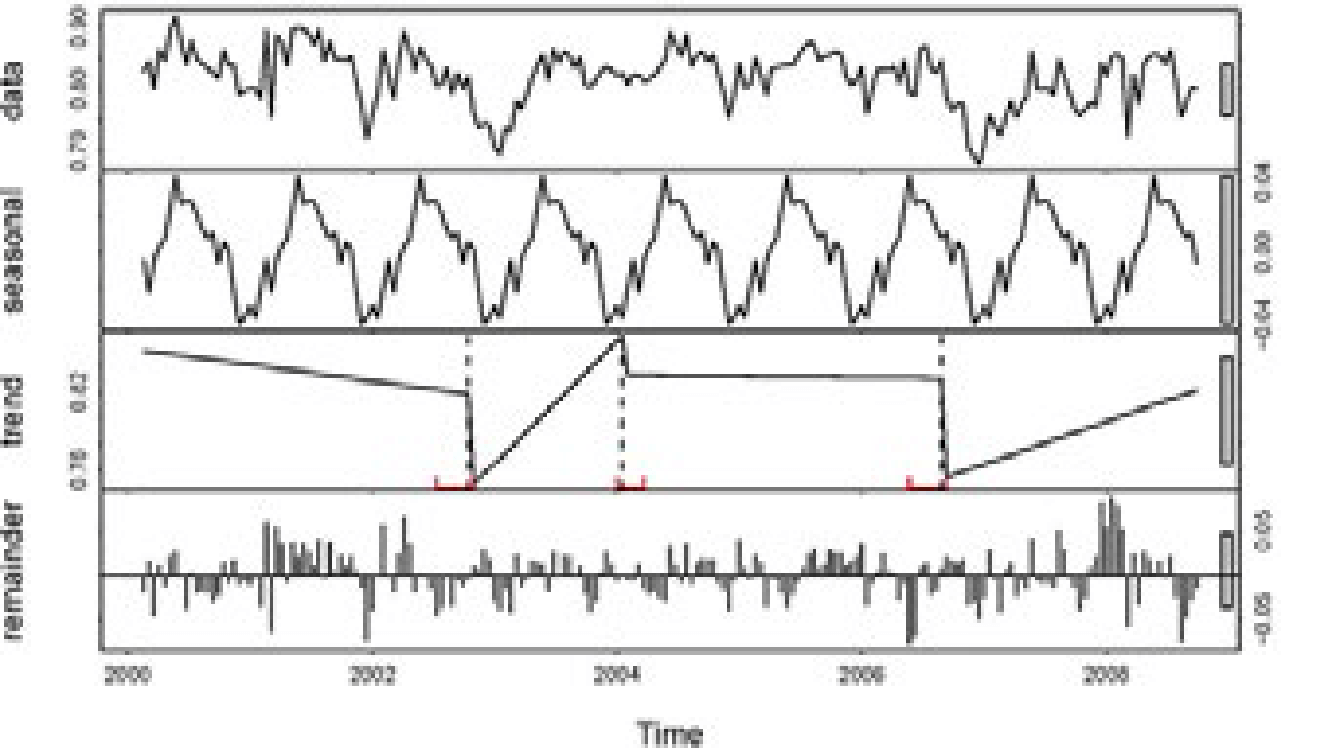

Three primary components of the traffic pattern, namely the fixed trend, cyclical trends, and seasonality, are measured individually and then aggregated.

The system collects behavioural and conversion data with Google Analytics 360 and uses the Real Time Management APIs to export relevant metrics into a separate database.

Then, the algorithm looks for unexpected changes in the data and sends automated real-time alerts to the relevant users via an SMS API and an email server.

Closely tracked metrics can include online-form completion rates, goal conversion rates, and page-load times for specific browsers and operating systems.

This solution is built with R, a popular, robust, and free programming language for statistical computing and visualization.

Google Analytics 360 data can be exported into R for advanced analysis through the RGoogleAnalytics Library.

With this, Tatvic can decompose the data into its trend and seasonal components.
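Tatvic's solution performs this step in R. As a language-neutral illustration, here is a minimal Python sketch of classical additive decomposition, which splits a series into trend + seasonal + residual and then treats unusually large residuals as anomaly candidates. It assumes an odd season length (7 days), a simple centered moving average for the trend, and made-up daily traffic with a steady trend, a weekly pattern, and one injected drop. It is not Tatvic's actual model.

```python
from statistics import mean

def decompose(series, period=7):
    """Classical additive decomposition for an odd period:
    series[t] = trend[t] + seasonal[t] + residual[t]."""
    n, half = len(series), period // 2
    # Trend: centered moving average (undefined at the edges).
    trend = [None] * n
    for t in range(half, n - half):
        trend[t] = mean(series[t - half:t + half + 1])
    # Seasonal: average detrended value at each position in the cycle,
    # shifted so the pattern sums to zero.
    buckets = [[] for _ in range(period)]
    for t in range(n):
        if trend[t] is not None:
            buckets[t % period].append(series[t] - trend[t])
    raw = [mean(b) for b in buckets]
    offset = mean(raw)
    pattern = [s - offset for s in raw]
    seasonal = [pattern[t % period] for t in range(n)]
    # Residual: whatever trend and seasonality do not explain.
    residual = [
        None if trend[t] is None else series[t] - trend[t] - seasonal[t]
        for t in range(n)
    ]
    return trend, seasonal, residual

# Hypothetical four weeks of daily sessions: upward trend + weekly pattern.
weekly = [50, 40, 30, 20, 10, -80, -70]
traffic = [1000 + 5 * t + weekly[t % 7] for t in range(28)]
traffic[17] -= 300  # injected drop, e.g. a tracking outage
trend, seasonal, residual = decompose(traffic)
worst = max((t for t in range(28) if residual[t] is not None), key=lambda t: abs(residual[t]))
print(worst, round(residual[worst], 1))
```

With only four weeks of data, the injected drop also leaks into the seasonal estimate for the same position in the week. This is one reason robust variants are often preferred; in R, the built-in `stats` package provides both `decompose()` (classical) and `stl()` (which has a `robust` option).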

Real-World Applications & Industry Use Cases

Anomaly detection isn’t confined to a single industry; its versatility makes it invaluable across diverse sectors:

1. Digital Marketing & Analytics (Tatvic’s Expertise!)

- Traffic Anomalies: Sudden, unexplained spikes in bot traffic, or drops in organic search volume for key terms.

- Conversion Rate Drops: Unexpected dips in checkout conversion rates, form submissions, or lead generation.

- Campaign Performance: Ad campaigns showing unusually high click-through rates (potential click fraud) or abnormally low return on ad spend.

- A/B Test Monitoring: Detecting if an A/B test is producing anomalous results that might indicate a technical issue rather than a genuine user preference.

- Customer Journey Analysis: Identifying unusual navigation paths that might indicate user frustration or new, unexpected success funnels.

2. Financial Services & Fraud Detection

- Credit card fraud, money laundering, insurance claim fraud, insider trading detection.

- Example: A bank flagging a series of small, rapid transactions in an unusual location, followed by a large withdrawal.

3. IT Operations & Network Security

- Network intrusions, denial-of-service (DoS) attacks, unusual access patterns, server performance degradation.

- Example: An IT team identifying an employee logging in from an unfamiliar country at an odd hour.

4. Manufacturing & IoT

- Predictive maintenance for machinery, quality control defect detection, supply chain disruptions.

- Example: Sensors on a factory machine detecting abnormal vibrations or temperature, signaling a potential breakdown before it occurs.

5. Healthcare

- Patient vital sign monitoring, disease outbreak detection, medical insurance fraud.

- Example: An automated system alerting doctors to a sudden, inexplicable change in a patient’s heart rate patterns.

Anomaly Detection Case Study!

Magicbricks is India’s top high-end property portal. The website caters to a global market with its unique services and novel online features for both buyers and sellers.

Given their commitment to user experience and performance across their site’s global operations, they needed a real-time solution to monitor and optimize against their key digital KPIs.

Tatvic helped the company develop a way to reduce website downtime and promptly alert management when outages occurred.

Using our real-time anomaly alerts platform, the company could clearly see variances in KPIs and move quickly to troubleshoot and fix them.

One day, the number of leads generated by the site’s pages fell below the threshold limit. This triggered an alert, and an email was immediately sent to the relevant team so that corrective steps could be taken the same day; otherwise, a substantial share of leads would probably have been lost.

Result: 70% faster response time

The automated SMS alert system is used to report outages, determine their severity, and provide real-time performance updates to senior and middle managers throughout Magicbricks.

Today, the entire Magicbricks team can make the most of these real-time insights to keep the site up and running and earning more every day.

The full story of anomaly detection at Magicbricks is available on the Google Analytics blog.

Overcoming Challenges in Anomaly Detection

While powerful, implementing anomaly detection is not without its hurdles:

- Defining ‘Normal’ is Hard: What constitutes “normal” can be highly subjective and dynamic, and it evolves over time, making it challenging to build robust models.

- Data Scarcity for Anomalies: Anomalies are rare by definition, so datasets are heavily imbalanced, with few examples of the very events a model must learn to recognize. This makes it difficult to train models that recognize anomalies accurately.

- High Dimensionality: Modern datasets often have hundreds or thousands of features (dimensions), making it harder to discern patterns and spot anomalies.

- Evolving Patterns: What was normal last month might be anomalous today, and vice versa. Models need to adapt to this concept drift.

- False Positives/Negatives: Overly sensitive models can flood you with false alarms (false positives), leading to alert fatigue. Under-sensitive models might miss critical anomalies (false negatives).

- Interpretability: Understanding why an anomaly was flagged can be challenging, especially with complex deep learning models, making it hard to take targeted action.
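One practical way to manage the false-positive/false-negative trade-off is to measure it. The minimal Python sketch below assumes you have a small set of past alerts that analysts have labeled as real or not (the scores and labels here are made up). It computes precision (the share of alerts that were real) and recall (the share of real anomalies that were caught) at two candidate thresholds.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall of the rule 'alert when score > threshold'."""
    flagged = [s > threshold for s in scores]
    tp = sum(f and y for f, y in zip(flagged, labels))        # real anomalies alerted
    fp = sum(f and not y for f, y in zip(flagged, labels))    # false alarms
    fn = sum(y and not f for f, y in zip(flagged, labels))    # missed anomalies
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical anomaly scores with analyst labels (True = confirmed anomaly).
scores = [0.2, 0.4, 0.9, 0.3, 0.8, 0.1, 0.7, 0.95]
labels = [False, False, True, False, False, False, True, True]

for threshold in (0.5, 0.85):
    p, r = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
```

Raising the threshold here removes the false alarm but misses one confirmed anomaly: the alert-fatigue versus missed-anomaly trade-off in miniature.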

Implementing Anomaly Detection: Best Practices for Success

To maximize the effectiveness of your anomaly detection efforts, consider these best practices:

- Start with Clear Objectives: Define what you want to achieve. Are you preventing fraud, optimizing marketing spend, or predicting system failures? Clear goals guide your data collection and model selection.

- Ensure Data Quality & Preprocessing: Clean, consistent, and relevant data is foundational. This includes handling missing values, normalizing features, and feature engineering.

- Select the Right Algorithm: There’s no one-size-fits-all. The best method depends on your data type (time series, categorical, numerical), the nature of anomalies, and your specific problem.

- Continuous Monitoring & Feedback Loops: Anomaly detection models are not set-it-and-forget-it solutions. Regularly monitor their performance, update models with new data, and incorporate human feedback to refine accuracy.

- Integrate with Existing Systems: To make anomaly detection actionable, integrate it with your alerting systems (e.g., Slack, email), dashboards (e.g., Google Looker Studio, Power BI), and operational tools.

- Start Simple, Then Scale: Begin with simpler methods and gradually move to more complex ones as your understanding of the data and problem deepens.

- Focus on Actionable Insights: The goal isn’t just to find anomalies, but to act on them. Ensure your system provides enough context for teams to understand and address the flagged issues.

How Tatvic Empowers Businesses with Anomaly Detection

At Tatvic, we understand that true business intelligence comes from not just seeing the data, but understanding the subtle shifts that impact your bottom line.

We move beyond generic tools, offering tailored Anomaly Detection solutions that integrate seamlessly with your existing data ecosystem.

Tatvic’s Approach To Empower Businesses with Anomaly Detection:

- Custom Model Development: Leveraging our expertise in AI and Machine Learning, we build bespoke anomaly detection models tuned to your specific business needs, data types, and operational context. Whether it’s detecting fraudulent transactions in finance or pinpointing unusual customer behavior in e-commerce, our solutions are purpose-built.

- Integration with Leading Analytics Platforms: We seamlessly integrate anomaly detection capabilities with your existing tools, including Google Analytics 4, Adobe Analytics, Google BigQuery, and other cloud data platforms. This means you get real-time alerts and insights directly within your familiar dashboards.

- Proactive Alerting & Visualization: Beyond just detection, we focus on making anomalies actionable. We design intuitive dashboards and automated alerting systems that notify the right teams instantly, providing context and severity assessments to enable rapid response.

- Data Health Monitoring: Our solutions help you maintain high data quality, identifying inconsistencies or errors that could skew your analytics and impact business decisions.

- Fraud & Security Optimization: For digital marketing, we implement sophisticated models to identify and mitigate various forms of ad fraud, click fraud, and unusual user behavior, protecting your marketing spend and brand reputation.

- End-to-End Implementation & Support: From initial data assessment and model training to deployment, monitoring, and ongoing refinement, Tatvic provides comprehensive support, ensuring you maximize the value of anomaly detection without heavy internal resource allocation.

Success Story Snippet: Imagine a leading e-commerce client who was struggling with unexplained drops in conversion rates during peak seasons. Tatvic implemented a real-time anomaly detection system that identified specific product categories experiencing unusual traffic sources and checkout flow errors, enabling the client to rectify issues within hours, preventing significant revenue loss.

The Future of Anomaly Detection

The field of anomaly detection is constantly evolving, driven by advancements in AI and the increasing complexity of data.

Key Anomaly Detection Trends to Watch:

- Real-time Detection at Scale: The ability to detect anomalies instantaneously across massive, streaming datasets will become standard.

- Explainable AI (XAI): As models become more complex, XAI will be crucial to provide human-understandable explanations for why an anomaly was flagged, fostering trust and enabling quicker action.

- Automated Anomaly Resolution: Moving beyond just alerting, future systems may suggest or even initiate automated responses to certain types of anomalies.

- Multi-Modal Anomaly Detection: Integrating data from various sources (e.g., text, images, sensor data, time series) to detect more complex, holistic anomalies.

Conclusion

In a world drowning in data, anomaly detection emerges as a lifeline, transforming raw data into actionable intelligence.

It’s the silent guardian protecting your business from unseen threats and the intelligent guide revealing paths to untapped growth.

By identifying the unusual, you gain unparalleled insights into your operations, security, and customer behavior.

Don’t let hidden anomalies erode your profits or jeopardize your reputation.

Partner with Tatvic, and leverage our expertise in advanced analytics and machine learning to build robust anomaly detection capabilities tailored to your unique business challenges.

Transform your data into your most powerful defense and a dynamic engine for growth.

Ready to uncover hidden insights and protect your business?

👉 Contact Tatvic today for a consultation on implementing cutting-edge Anomaly Detection solutions.